Images that Sound

Date: May 2024

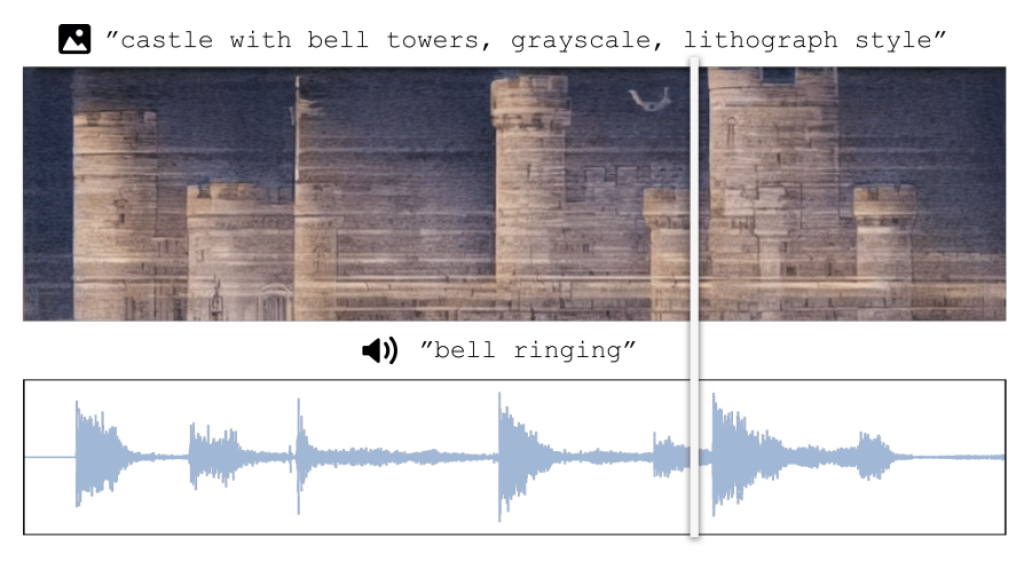

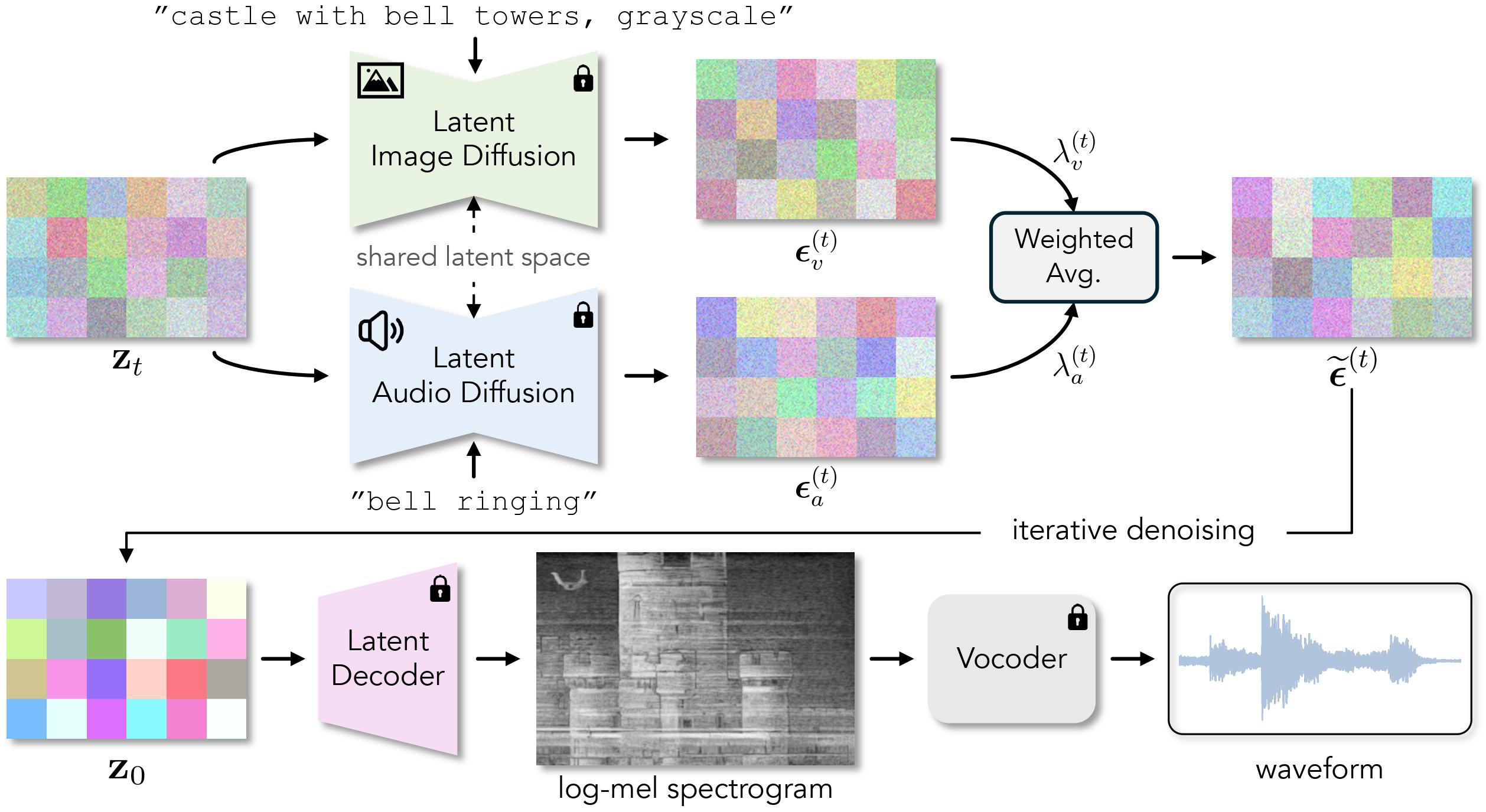

Spectrograms are 2D representations of sound that look very different from the images found in our visual world. Conversely, natural images played as spectrograms produce unnatural sounds. In this paper, we show that it is possible to synthesize spectrograms that simultaneously look like natural images and sound like natural audio. We call these spectrograms "images that sound." Our approach is simple and zero-shot: it leverages pre-trained text-to-image and text-to-spectrogram diffusion models that operate in a shared latent space. During the reverse process, we denoise noisy latents with both the audio and the image diffusion models in parallel, resulting in a sample that is likely under both models.
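
The joint reverse process can be illustrated with a minimal sketch under stated assumptions. It is not the authors' implementation. Both pretrained models are replaced by a hypothetical `toy_predictor` whose data distribution is a single point. The two noise estimates are combined by a weighted average controlled by a hypothetical `image_weight`. Updates use deterministic DDIM steps, a sampler chosen here for simplicity. What carries over from the description above is the structure: one shared latent, and both models queried on it at every step. Text conditioning, guidance, and the real latent shapes are omitted.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
# A noise predictor maps (noisy latent z_t, alpha_bar_t) to a noise estimate.
NoisePredictor = Callable[[Sequence[float], float], Vector]


def toy_predictor(target: Sequence[float]) -> NoisePredictor:
    """Hypothetical stand-in for a pretrained diffusion model.

    Its data distribution is a single point `target`, so the ideal noise
    estimate is (z_t - sqrt(alpha_bar) * target) / sqrt(1 - alpha_bar).
    """
    def predict(z_t: Sequence[float], alpha_bar: float) -> Vector:
        signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
        return [(z - signal * m) / noise for z, m in zip(z_t, target)]
    return predict


def joint_ddim_sample(
    z_T: Sequence[float],
    image_model: NoisePredictor,
    audio_model: NoisePredictor,
    alpha_bars: Sequence[float],
    image_weight: float = 0.5,
) -> Vector:
    """Denoise ONE shared latent with both models at every step.

    `alpha_bars` runs from noisy (small, > 0) to clean (last entry 1.0).
    Each step averages the two noise estimates and takes a deterministic
    DDIM update, so the latent is pulled toward both models at once.
    """
    z = list(z_T)
    for a_t, a_prev in zip(alpha_bars[:-1], alpha_bars[1:]):
        eps_img = image_model(z, a_t)
        eps_aud = audio_model(z, a_t)
        # Weighted average of the two noise estimates on the same latent.
        eps = [image_weight * ei + (1.0 - image_weight) * ea
               for ei, ea in zip(eps_img, eps_aud)]
        # Estimate the clean latent from the combined noise estimate ...
        z0 = [(zi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t)
              for zi, e in zip(z, eps)]
        # ... then move it to the next, less noisy level (deterministic DDIM).
        z = [math.sqrt(a_prev) * z0i + math.sqrt(1.0 - a_prev) * e
             for z0i, e in zip(z0, eps)]
    return z


if __name__ == "__main__":
    image_model = toy_predictor([1.0, 0.0, 2.0])
    audio_model = toy_predictor([3.0, 4.0, 0.0])
    schedule = [0.01, 0.1, 0.4, 0.8, 1.0]
    sample = joint_ddim_sample([0.3, -1.2, 0.7], image_model, audio_model, schedule)
    print([round(v, 6) for v in sample])  # → [2.0, 2.0, 1.0]
```

With point-mass toy models, the sample lands (up to floating-point error) on the weighted mean of the two targets. This is a property of the toy only. Real image and spectrogram models instead steer the shared latent toward regions that both consider plausible.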

Idea Behind This Work

How do we create such artworks? To make them, we need a sample that is likely under both the distribution of spectrograms and the distribution of natural images. We pose this as a multimodal compositional generation task and solve it by denoising with a spectrogram diffusion model and an image diffusion model simultaneously.
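
One common way to make "likely under both distributions" concrete is the standard compositional-diffusion view. This is offered as a hedged illustration, not as the paper's exact derivation. The target is taken to be a weighted product of the two densities, with $\lambda \in [0, 1]$ an assumed mixing weight and $\mathbf{z}$ the shared latent. The score of such a product is the weighted sum of the individual scores. A noise-prediction network satisfies $\boldsymbol{\epsilon}(\mathbf{z}_t, t) \approx -\sqrt{1-\bar\alpha_t}\,\nabla_{\mathbf{z}_t} \log p_t(\mathbf{z}_t)$, where $\bar\alpha_t$ is the usual cumulative noise-schedule term. Combining the scores therefore amounts to averaging the two noise estimates:

$$
\tilde p(\mathbf{z}) \propto p_{\text{img}}(\mathbf{z})^{\lambda}\, p_{\text{aud}}(\mathbf{z})^{1-\lambda}
\;\Longrightarrow\;
\nabla_{\mathbf{z}} \log \tilde p(\mathbf{z}) = \lambda\, \nabla_{\mathbf{z}} \log p_{\text{img}}(\mathbf{z}) + (1-\lambda)\, \nabla_{\mathbf{z}} \log p_{\text{aud}}(\mathbf{z})
$$

$$
\boldsymbol{\epsilon}(\mathbf{z}_t, t) = \lambda\, \boldsymbol{\epsilon}_{\text{img}}(\mathbf{z}_t, t) + (1-\lambda)\, \boldsymbol{\epsilon}_{\text{aud}}(\mathbf{z}_t, t)
$$

Applying this combination at every noise level is a heuristic. In general, the noised version of a product of densities is not the product of the noised densities. The approach also relies on both models sharing a latent space, so that $\boldsymbol{\epsilon}_{\text{img}}$ and $\boldsymbol{\epsilon}_{\text{aud}}$ are estimates for the same $\mathbf{z}_t$.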

More information can be found below:

– Website: https://ificl.github.io/images-that-sound/

– Paper: https://arxiv.org/abs/2405.12221

– Code: https://github.com/IFICL/images-that-sound